The Best 3D Conference

Do you think you know something about stereoscopic 3D? Test yourself with the six basic journalistic questions: who, what, when, where, how, and why.

Who wrote the first article on holographic television to appear in the SMPTE Journal? What is cyclovergence? When is a seven-second stereo delay appropriate? Where can lens centering be corrected instantly in any stereoscopic camera rig without digital processing? How does viewing distance affect scene depth? And why doesn’t pseudostereo destroy depth perception?

All of those questions, and many more, were answered last month at the Society of Motion-Picture and Television Engineers’ (SMPTE’s) 2nd-annual International Conference on Stereoscopic 3D for Media & Entertainment, held in New York’s Hudson Theater (left, photo by Ken Carroza). From my point of view, it was the best stereoscopic-3D event that has ever taken place anywhere.

Full disclosure: As a member of the trade press, I was admitted free (and got some free food). The same is true at most conferences I attend. And the complimentary entry and food have never stopped me from panning events I didn’t like. This one I loved!

The thrills started with the very first presentation, “Getting the Geometry Right,” by Jenny Read, a research fellow at the Institute of Neuroscience at Newcastle University. Read earned her Oxford doctorate in theoretical astrophysics before moving into visual neuroscience, where she spent four years at the U.S. National Institutes of Health.

I’ve provided that snippet of her biographical info here as a small taste of the caliber of the presenters at the conference. There were many other vision scientists, but other presenters were associated with movie studios, manufacturers, shooters, and service providers. And the audience ran the gamut from engineering executives at television networks and movie studios to New York public-access-cable legend Ugly George (right).

Back to Dr. Read’s presentation: I can’t say that everyone in the audience did so, but, as far as I could see, attendees were frantically taking notes almost as soon as she started speaking. Consider, for example, cyclovergence. It’s well known that our eyes can pivot up and down (around the x-axis) and left and right (around the y-axis), but did you know they can also rotate clockwise and counterclockwise (around the z-axis)? When the two eyes do that in opposite directions, it’s called cyclovergence, and it’s actually common.

The main topic of Read’s presentation was vertical disparities between the two eye views. Those caused by camera or lens misalignments are typically processed out, but ordinary vision includes vertical disparities introduced by eye pointing.

At right is an illustration from Peter Wilson and Kommer Kleijn’s presentation last year at the International Broadcasting Convention (IBC), “Stereoscopic Capture for 2D Practitioners.” If our eyes converge on something, the theoretical rectangular plane of convergence becomes two trapezoids with vertical disparities. So vertical disparities are not necessarily problematic for human vision.
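For readers who like to check geometry with a few lines of code, here is a minimal Python sketch of that trapezoid effect. It is an illustration only, not something shown by Read or by Wilson and Kleijn. It assumes idealized pinhole eyes, a 65 mm eye separation, and symmetric convergence on a point straight ahead; the function names `project` and `vertical_disparity` are made up for the example.

```python
import math


def project(point, eye_x, yaw, focal=1.0):
    """Pinhole projection of a 3D point (x, y, z) into an eye located at
    (eye_x, 0, 0) and rotated by `yaw` radians about the vertical axis.
    A positive yaw turns the eye toward +x. Returns image (x, y)."""
    dx, dy, dz = point[0] - eye_x, point[1], point[2]
    # Express the point in the rotated eye's coordinate frame.
    px = dx * math.cos(yaw) - dz * math.sin(yaw)
    pz = dx * math.sin(yaw) + dz * math.cos(yaw)
    return focal * px / pz, focal * dy / pz


def vertical_disparity(point, eye_sep=0.065, fixation_dist=1.0):
    """Left-eye image height minus right-eye image height for `point`,
    with both eyes converged on (0, 0, fixation_dist). Assumed model."""
    yaw = math.atan2(eye_sep / 2, fixation_dist)
    _, y_left = project(point, -eye_sep / 2, +yaw)   # left eye turns right
    _, y_right = project(point, +eye_sep / 2, -yaw)  # right eye turns left
    return y_left - y_right


# Corners and top-center of a rectangle in the plane of the fixation point.
for corner in [(-0.4, 0.3, 1.0), (0.0, 0.3, 1.0), (0.4, 0.3, 1.0)]:
    print(corner, vertical_disparity(corner))
```

In this model the top-right corner lands slightly lower in the left eye’s image than in the right eye’s, the top-left corner does the opposite, and points on the vertical midline show no vertical disparity at all. That is the pair of opposing trapezoids.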

Read showed other ways vertical disparities can be introduced. At left is what two eyes would see if looking towards the right (as might be the case when sitting to the left of a stereoscopic display screen). Then she explained how our brains convert vertical-disparity information into depth information, so an oblique view of the screen can make people look like living cut-out puppets.

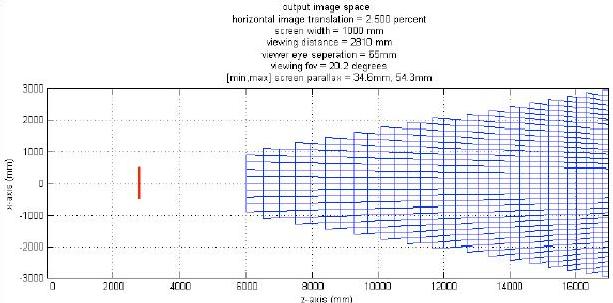

That is not the only mechanism for changing apparent depth. Below is a graph from “Effect of Scene, Camera, and Viewing Parameters on the Perception of 3D Imagery,” presented by Brad Collar of Warner Bros. and Michael D. Smith. They showed depth in a scene, what it looks like when seen on a cinema-sized screen, and what it looks like on other screens, such as those used for home viewing. The depth collapsed from its cinema look to its home look, effectively going from normal character roundness to the appearance of cardboard cutouts. But the graph below shows what happened after processing to restore the depth. The roundness came back, but everything was pushed behind the screen (the red vertical line).
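A rough feel for why depth collapses on smaller screens can be had from the textbook similar-triangles model of stereoscopic viewing, sketched below in Python. This is not the model Collar and Smith presented, just a common simplification. It assumes a 65 mm eye separation, a viewer centered in front of the screen, and made-up screen sizes and viewing distances; the names `perceived_distance` and `depth_span` are invented for the example.

```python
def perceived_distance(parallax_m, viewing_distance_m, eye_sep_m=0.065):
    """Distance from the viewer at which a point appears, given its
    on-screen parallax (right-eye x minus left-eye x, in meters).
    Positive parallax is behind the screen, negative is in front."""
    if parallax_m >= eye_sep_m:
        # Eyes would be parallel or diverging; treat as infinitely far.
        return float("inf")
    return viewing_distance_m * eye_sep_m / (eye_sep_m - parallax_m)


def depth_span(screen_width_m, viewing_distance_m, near_frac, far_frac):
    """Apparent depth between two points whose parallaxes are stored in
    the image as fractions of picture width (the same on any screen)."""
    near = perceived_distance(near_frac * screen_width_m, viewing_distance_m)
    far = perceived_distance(far_frac * screen_width_m, viewing_distance_m)
    return far - near


# Same content, two hypothetical viewing setups.
cinema = depth_span(12.0, 15.0, near_frac=0.002, far_frac=0.004)
home = depth_span(1.0, 3.0, near_frac=0.002, far_frac=0.004)
print(f"cinema depth span: {cinema:.2f} m, home depth span: {home:.2f} m")
```

Because the parallax is baked into the picture as a fraction of its width, shrinking the screen shrinks the parallax in absolute terms, and the apparent depth between the two points shrinks far more than the screen does.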

Some viewers have complained about miniaturization in 3D TV, such as burly football players looking like little dolls. But our visual systems can perform amazing feats of depth correction.

In a presentation titled “Depth Cue Interactions in Stereoscopic 3D Media,” Robert Allison of York University noted a few of the cues viewers use for depth perception, including occlusion and perspective. Then he showed a real-world 3D scene. It appeared to be in 3D, and it offered a stereoscopic sensation, though something seemed to be wrong. In fact, it was intentionally pseudostereoscopic, with left- and right-eye views reversed. But the non-stereoscopic depth cues kept the apparent depth correct. Later he showed how even just contrasty lighting can increase apparent depth sensation.

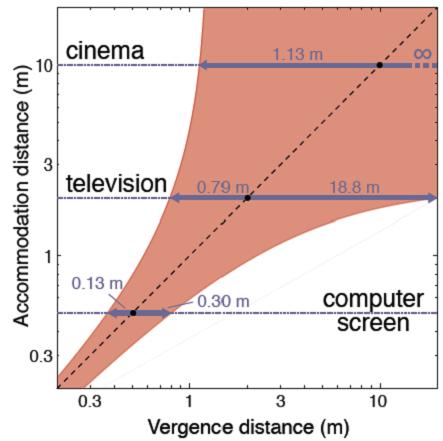

Of course, lost roundness (and the associated miniaturization) isn’t the only perceptual issue associated with stereoscopic 3D. In “Focusing and Fixating on Stereoscopic Images: What We Know and Need to Know,” Simon Watt of Bangor University showed some of the latest information on viewer discomfort caused by a conflict between vergence (the distance derived from where the eyes point) and accommodation (the distance derived from what the eyes are focused on, which is the screen).

The chart at right is based on some of the latest work from the Visual Space Perception Laboratory at the University of California – Berkeley. It shows that the approximate comfort zone depends, as might be expected, on viewing distance. In a cinema, viewers should be comfortable with depth going away from them to infinity and coming out of the screen almost to hit them on their faces. At a TV-viewing distance, even far depth can be uncomfortable. At a computer-viewing distance, the comfort range is smaller still.
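One way to see why the zone narrows as the screen gets closer is to measure the vergence-accommodation mismatch in diopters (1 divided by distance in meters). The Python sketch below assumes a fixed, symmetric tolerance in diopters around the screen distance. That tolerance, and its 0.3-diopter default, are placeholder assumptions chosen for illustration; they are not the Berkeley lab’s measured comfort zone, which is not symmetric. The function name `comfort_range` is likewise invented.

```python
def comfort_range(viewing_distance_m, tolerance_diopters=0.3):
    """Nearest and farthest apparent distances (meters) whose vergence
    demand stays within `tolerance_diopters` of the screen's focal
    demand. The tolerance value is an assumed placeholder."""
    screen_diopters = 1.0 / viewing_distance_m
    near_m = 1.0 / (screen_diopters + tolerance_diopters)
    far_diopters = screen_diopters - tolerance_diopters
    # Zero or negative diopters means the limit is at or beyond infinity.
    far_m = float("inf") if far_diopters <= 0 else 1.0 / far_diopters
    return near_m, far_m


# Hypothetical viewing distances for each kind of screen.
for label, distance in [("cinema", 10.0), ("TV", 3.0), ("computer", 0.5)]:
    near, far = comfort_range(distance)
    print(f"{label:8s} screen at {distance:4.1f} m: {near:.2f} m to {far:.2f} m")
```

With these assumed numbers, the far limit reaches infinity at cinema distance, becomes finite at TV distance, and at computer distance the whole range shrinks to a few centimeters on either side of the screen.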

Viewing distances for handheld devices should result in even narrower comfort zones, but that’s only if they’re stereoscopic. There are other options that were discussed at the SMPTE conference. Ichiro Kawakami of Japan’s National Institute of Information and Communications Technology (NICT) described a glasses-free 200-projector-based autostereoscopic display. Douglas Lanman of the MIT Media Lab actually brought a demonstration of a layered light-field system that attendees could hold. As shown below (more info here: http://www.layered3d.info), the researchers have come up with a mechanism for reproducing the original light field.

On the same day that The New York Times reported on a camera that allows focus to be controlled after a picture is taken (http://www.nytimes.com/2011/06/22/technology/22camera.html), Professor Marc Levoy of Stanford University explained to attendees at the SMPTE conference how it’s done and the application of “computational cinematography” to 3D. And then there’s holography.

Mark Lucente of Zebra Imaging gave a presentation titled “The First 20 Years of Holographic Video — and the Next 20.” But he was sort of contradicted (at least in terms of the earliest date) by the next presentation, from V. Michael Bove of MIT, titled “Live Holographic TV: from Misconceptions to Engineering.”

In 1962, Emmett Leith and Juris Upatnieks of the University of Michigan created what is generally considered the first 3D hologram. In 1965, they published a paper (left) in the SMPTE Journal about what would be required for holographic 3D TV (Lucente was referring to actual, not theoretical displays; see his comment below).

Perhaps live entertainment holography is still not quite around the corner. That’s okay. The SMPTE conference offered plenty of practical information that can be used today.

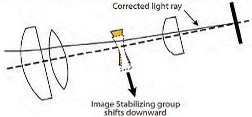

Consider “New Techniques to Compensate Mistracking within Stereoscopic Acquisition Systems,” by Canon’s Larry Thorpe. Dual-camera rigs can be adjusted so that optical centers are exactly where they should be, which is not necessarily where the camera bodies would suggest. There are tolerances in lens mounts on both the lens and camera portions that can add up to significant errors. And once zooming is added, all bets are off.

Two groups of lens elements move to effect the magnification change and focus compensation, and a third group moves to adjust focus. It’s a mess! But lens manufacturers have introduced optical image stabilization systems, one version of which is shown at right. With the appropriate controls, those stabilizing elements can be used to keep the images stereoscopically centered throughout the zoom and focus ranges.

Then there was “S3D Shooting Guides: Needed Tools for Stereo 3D Shooting” by Panasonic’s Michael Bergeron. The presentation compared indicators used by videographers to achieve appropriate gray scale and color to indicators they might use to achieve appropriate depth.

In a similar vein, Bergeron extended the concept of the “seven-second” obscenity delay (which allows producers of live programming to cut from inappropriate material to “safe” pictures and sounds) to a “seven-second stereo delay” that would allow stereographers to cut away from, say, window violations in live programming.
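The mechanics of such a delay are simple enough to sketch in Python. The example below is only a toy illustration of the idea, not Bergeron’s design or any broadcast product: live frames go into a buffer, come out a fixed number of frames later, and an operator who spots a problem can mark the most recent frames so that a “safe” picture airs in their place. The class and method names are invented, and the frame rate used to turn seven seconds into a frame count is an assumption.

```python
from collections import deque


class StereoDelay:
    """Toy fixed-length frame delay with a retroactive cutaway."""

    def __init__(self, delay_frames, safe_frame):
        self.delay_frames = delay_frames
        self.safe_frame = safe_frame
        self.pending = deque()  # entries are [frame, replace_with_safe]

    def push(self, frame):
        """Accept one live frame; return the frame to air now, or None
        while the delay buffer is still filling."""
        self.pending.append([frame, False])
        if len(self.pending) <= self.delay_frames:
            return None
        frame_out, replace = self.pending.popleft()
        return self.safe_frame if replace else frame_out

    def cut_away(self, last_n):
        """Mark the newest `last_n` buffered frames (not yet aired) so
        the safe frame is sent out in their place."""
        if last_n <= 0:
            return
        for entry in list(self.pending)[-last_n:]:
            entry[1] = True


# Seven seconds at an assumed 30 frames per second.
delay = StereoDelay(delay_frames=7 * 30, safe_frame="2D wide shot")
```

A stereographer who sees a window violation on the live feed would call `cut_away` with roughly the number of frames the violation lasted, and viewers would get the safe picture when those frames finally reached the output.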

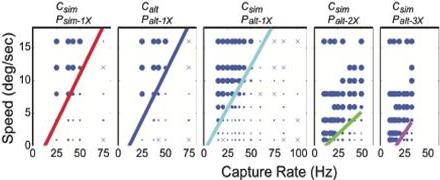

There was much more at the conference. As an alternative to stereoscopic TVs that require either active glasses or spatial-resolution-reducing patterns for passive glasses, a RealD presentation described a passive-glasses system with full resolution. Martin Banks, of the Berkeley lab, described the temporal effects of different frame rates and image-presentation systems (as shown above).

Unlike this post, which must be viewed without benefit of 3D glasses, “I Can See Clearly Now — in 3D,” by Norm Hurst of SRI/Sarnoff, was presented entirely in stereoscopic 3D. That helped audience members see how a test pattern can be used to determine 3D characteristics with nothing more than a stereoscopic 3D display. It was previewed at the HPA Tech Retreat in February (http://www.schubincafe.com/2011/03/27/ex-uno-plures/).

I’ve mentioned only about half of the presentations at the conference, and I’ve offered only a tiny fraction of the content of even those. SMPTE used dual 2K images on a 4K Sony projector to allow stereoscopic content examples to be viewed with RealD glasses but without view alternation (though even that arrangement introduced an interesting artifact identified by Hurst’s test pattern). As many of the speakers pointed out, we still have a lot to learn about 3D. And, if you didn’t attend SMPTE’s conference, you’ll need to learn more still. Better at least join SMPTE so you can read the full papers that get published in the Journal (http://www.smpte.org).

—

The following comment was received from Mark Lucente:

“As I described in my talk, holographic video had been theorized and discussed for decades (going back to Dennis Gabor in the 1950s!). However, researchers at the MIT Media Lab (Prof. Stephen Benton, myself, and one other graduate student) were the first to ever BUILD a working real-time 3D holographic display system — in 1990. The title of my talk ‘The First 20 Years of Holographic Video…’ refers to 20 years of the existence of working displays, rather than theoretical.

“On a related note, just to be sure, Emmett Leith and Juris Upatnieks made the first laser-based 3D holograms. These were photographic — not real-time video. In other words, they were permanent recordings of 3D imagery, not real-time display of moving images. They were both brilliant, and contributed to the theoretical foundations of what eventually (in 1990) became the first-ever actual working holographic video system at MIT.”